Iterative residual policy for dynamic manipulation

Dynamic manipulation of deformable objects

- complex dynamics (introduced by object deformation and high-speed action)

- strict task requirements (defined by a precise goal specification)

- rope whipping

- action space: target angles for two joints and angular velocity across all joints

- observation space

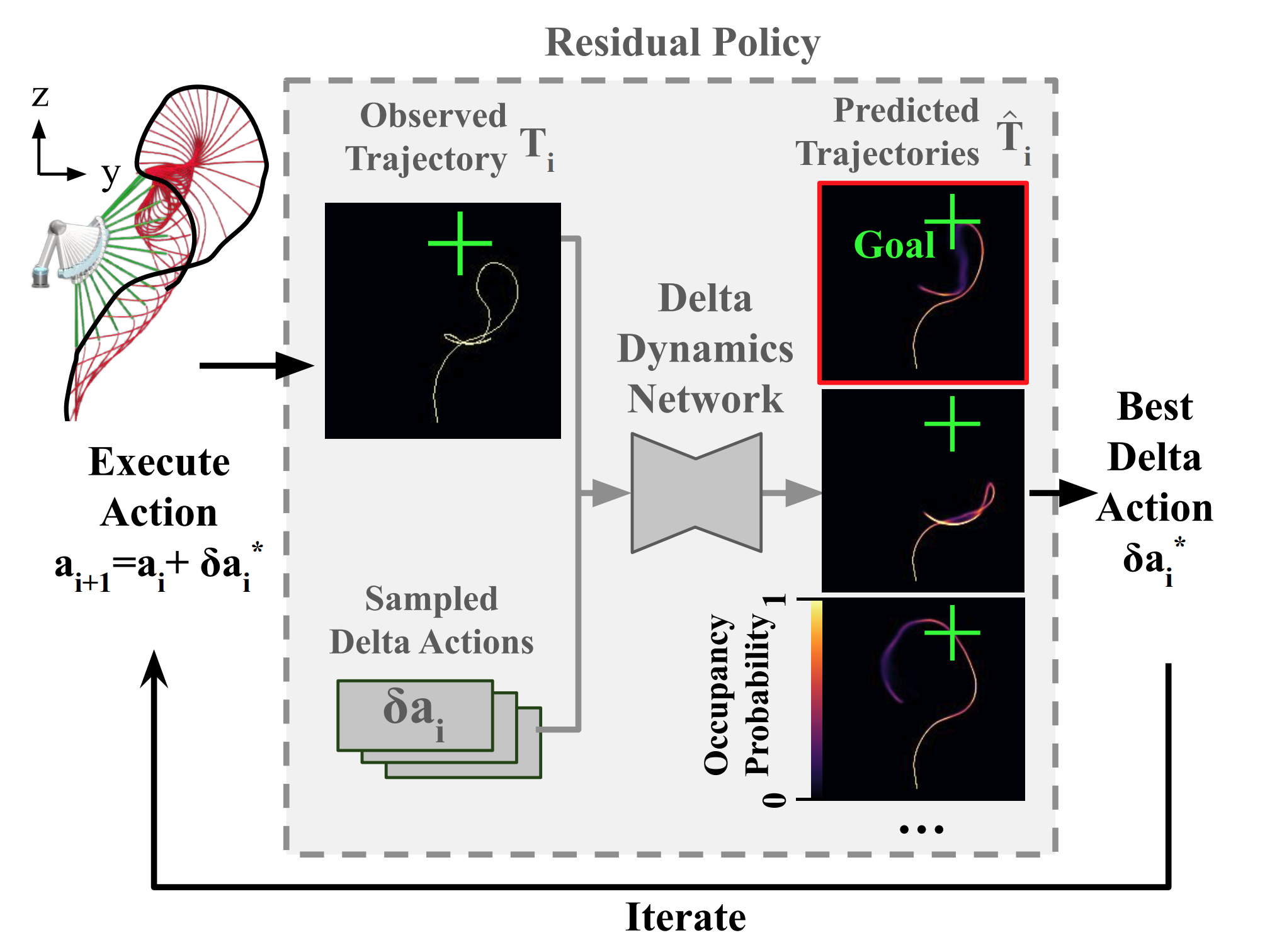

- tip trajectory as an image

- The pixel values correspond to occupancy probability

- the observed trajectories are binary: each pixel is either 0 or 1

- the predicted trajectories are real-valued: each pixel is between 0 and 1
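To make the observation format concrete, here is a minimal sketch of how a tip trajectory could be turned into a binary occupancy image. The function name `rasterize_trajectory`, the grid size, and the workspace bounds are assumptions made for illustration; the notes above do not state the resolution or coordinate frame used in [1].

```python
from typing import List, Sequence, Tuple


def rasterize_trajectory(
    points: Sequence[Tuple[float, float]],
    size: int = 8,                              # hypothetical image resolution
    bounds: Tuple[float, float] = (-1.0, 1.0),  # hypothetical square workspace
) -> List[List[int]]:
    """Mark every grid cell visited by the tip as occupied (1), all others as 0."""
    lo, hi = bounds
    image = [[0] * size for _ in range(size)]
    for x, y in points:
        # Map workspace coordinates to pixel indices and clamp to the grid.
        col = min(size - 1, max(0, int((x - lo) / (hi - lo) * size)))
        # Row 0 is the top of the image, so the y axis is flipped.
        row = min(size - 1, max(0, int((hi - y) / (hi - lo) * size)))
        image[row][col] = 1
    return image
```

A predicted trajectory would use the same grid but store a float in [0, 1] per pixel instead of a 0/1 value.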

Iterative Residual Policy (IRP)

- learns delta dynamics that predict the effects of delta action on the previously-observed trajectory

- metric for picking action from the predicted trajectories: min distance from any point of the tip trajectory to the goal location

- loss to train the prediction model: binary cross-entropy between the predicted trajectory and the true trajectory observed after applying the delta action

- sample actions from a uniform distribution and delta actions from a Gaussian distribution
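The pieces listed above can be sketched in plain Python as follows. This is an illustrative sketch, not the implementation from [1]: `predict_delta` stands in for the learned delta-dynamics model and is a hypothetical callable, the occupancy threshold and sample count are assumptions, and keeping the current action as a fallback candidate is a choice made for this sketch.

```python
import math
import random
from typing import Callable, List, Optional, Tuple

Image = List[List[float]]


def bce_loss(pred: Image, target: Image, eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between predicted and observed occupancy images."""
    total, count = 0.0, 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            p = min(1.0 - eps, max(eps, p))  # clamp to avoid log(0)
            total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
            count += 1
    return total / count


def min_distance_to_goal(
    image: Image, goal: Tuple[int, int], threshold: float = 0.5
) -> float:
    """Smallest pixel distance from any occupied pixel to the goal pixel."""
    best = math.inf
    for r, row in enumerate(image):
        for c, p in enumerate(row):
            if p >= threshold:  # assumed cutoff for treating a pixel as occupied
                best = min(best, math.hypot(r - goal[0], c - goal[1]))
    return best


def irp_step(
    observed: Image,
    action: List[float],
    goal: Tuple[int, int],
    predict_delta: Callable[[Image, List[float], List[float]], Image],
    n_samples: int = 32,
    sigma: float = 0.1,
    rng: Optional[random.Random] = None,
) -> Tuple[List[float], float]:
    """Sample Gaussian delta actions, predict each outcome, return the best action."""
    rng = rng or random.Random(0)
    # Start from the current action so the step never picks a worse prediction.
    best_action, best_dist = list(action), min_distance_to_goal(observed, goal)
    for _ in range(n_samples):
        delta = [rng.gauss(0.0, sigma) for _ in action]
        predicted = predict_delta(observed, action, delta)
        dist = min_distance_to_goal(predicted, goal)
        if dist < best_dist:
            best_action = [a + d for a, d in zip(action, delta)]
            best_dist = dist
    return best_action, best_dist
```

In use, the first action would be drawn uniformly from the action range, executed to obtain an observed trajectory image, and then `irp_step` would be called repeatedly, executing the returned action and re-observing after each call.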

My thoughts on IRP

- The approach still follows the concept of learning world dynamics (states + action → next states)

- train a model to predict trajectories well, and choose actions based on this

- The key differences make it useful for applying the framework to unseen environments or agents

- delta actions effectively capture the effect of the changes in actions

- (even without delta actions, the iterative and sampling parts of the method can still be applied)

- similar to the concept of using velocity in the states instead of previous location

- whole trajectory prediction instead of step-by-step prediction

- → this makes it easy to set the criteria for picking the best action for the next iteration

- this limits the applications to repeatable tasks with complex dynamics

[1] Chi, Cheng, et al. "Iterative residual policy: for goal-conditioned dynamic manipulation of deformable objects." arXiv preprint arXiv:2203.00663 (2022).